Amazon SageMaker is one of the most popular managed machine learning services, enabling teams to build, train, and deploy models at scale. But while SageMaker simplifies the ML workflow, understanding its pricing can be tricky. Costs vary depending on instance types, training jobs, endpoints, and storage, making it essential to know how SageMaker pricing works before running workloads.

In this guide, we break down everything you need to know about Amazon SageMaker pricing and share strategies to optimize Machine Learning costs without sacrificing performance.

Amazon SageMaker Pricing Explained

Amazon SageMaker is a fully managed service that provides a wide range of tools for high-performance, cost-effective machine learning across various use cases. It enables users to build, train, and deploy models at scale through an integrated development environment (IDE) that includes Jupyter notebooks, debuggers, profilers, pipelines, and other MLOps capabilities.

SageMaker billing is based on a pay-as-you-go model. You pay only for the resources you use—there are no upfront fees or long-term commitments. This on-demand model allows teams to scale their machine learning workflows dynamically, matching costs to actual usage. If you’re unsure whether SageMaker meets your needs, you can start with the

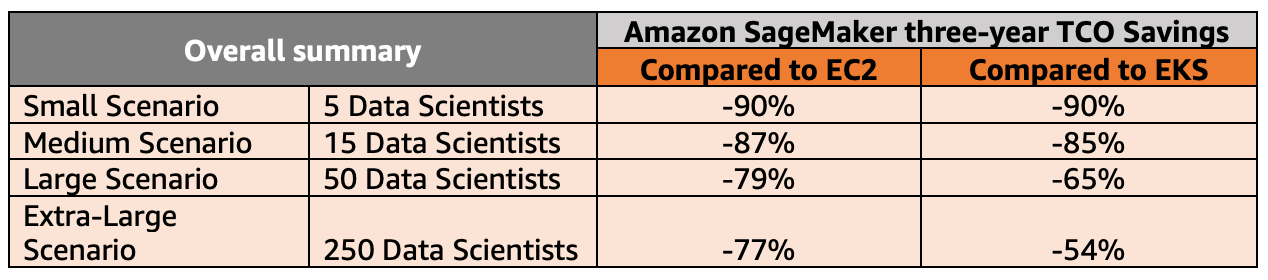

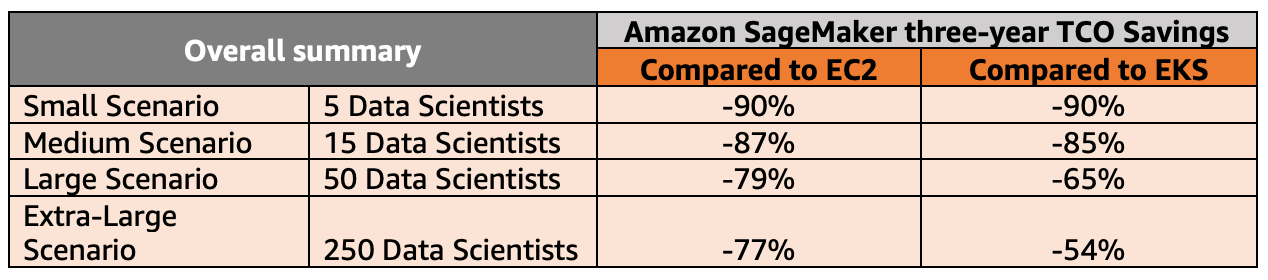

Amazon SageMaker Free Tier, which provides a limited amount of compute and storage for testing. This lets teams experiment with features and workflows before committing to larger workloads. From a total cost of ownership (TCO) perspective, SageMaker can help organizations reduce the need for extensive data science infrastructure and specialized personnel. By automating many aspects of the ML lifecycle—such as model training, tuning, and deployment—SageMaker can accelerate development while lowering operational overhead. This makes it a compelling choice for teams looking to scale machine learning efficiently.

How Does Amazon SageMaker Pricing Structure Work?

Amazon SageMaker follows an On-Demand, pay-as-you-go pricing model, meaning your costs depend entirely on how you use the service (we’ll cover commitments like Savings Plans in the next section). AWS Sagemaker Pricing varies based on the specific SageMaker features you leverage, the type and size of ML instances you choose, the region in which you run them, and the duration of use. Each component is billed separately. In practice, most of your cost will be driven by the compute resources you run (especially training jobs and inference endpoints), how long those resources stay active, and any large datasets you store or process through SageMaker. The table below breaks down the key SageMaker services & components, typical instance types, rates, and key tips to optimize costs.

| Component | What You’re Paying For | Example Instances | Typical Pricing (US East, Ohio) | Usage Notes / Tips |

| Notebook Instances | Compute resources for Jupyter notebooks | ml.t3.medium, ml.m5.large, ml.c5.large | $0.046 – $0.102/hour | Shut down idle notebooks; use smaller instances for exploration and testing. |

| SageMaker Studio | IDE environment usage (compute underlying instances) | ml.t3.medium, ml.m5.large | $0.10 – $0.115/hour | Studio itself has no cost; billing is for the underlying instances. Free tier: 250 hours/month. |

| Training Jobs | Compute for model training; charged per instance × duration | CPU: ml.m5.large, GPU: ml.p3.2xlarge | $0.115/hour (m5.large), $3.825/hour (p3.2xlarge) | Distributed training costs multiply by the number of instances. Stop jobs when complete. |

| Real-Time Inference Endpoints | Dedicated compute for serving models | ml.m5.xlarge, ml.p3.2xlarge | $0.115 – $3.825/hour | Idle endpoints still bill; consider auto-scaling or serverless endpoints for low-traffic models. |

| Serverless Inference | Compute billed per invocation duration | N/A | 150,000 seconds free/month, then usage-based | Ideal for intermittent requests; no instance management needed. |

| Batch Transform | Compute for batch predictions | ml.m5.large, ml.c5.large | $0.115 – $0.102/hour | Cost depends on instance type, number of instances, and total batch processing time. |

| Sagemaker Data Processing Jobs | Compute for data preprocessing, ETL, feature engineering, and batch workflows | ml.m5.xlarge, ml.c5.2xlarge | $0.23 – $0.34/hour | Runs on dedicated instances per job; billed per instance-hour. Great for ETL and feature engineering; size jobs correctly and run only when needed. |

| Data Storage | Storing datasets, model artifacts, and endpoints | Amazon S3 | $0.023/GB/month (standard S3) | Clean up unused models and datasets to save costs. |

| Data Transfer | Moving data in/out of SageMaker or between regions | N/A | Varies by volume and region; e.g., $0.09/GB outbound | Keep large datasets in the same region as your instances to reduce transfer costs. |

| Data Labeling (Ground Truth) | Cost per labeling task | N/A | ~$0.10 – $1.00 per labeled object (varies by complexity) | Useful for creating high-quality training datasets. |

| Data Wrangler | Compute for preparing, transforming, and cleaning data | ml.m5.4xlarge | $1.20/hour | Free tier: 25 hours/month. |

| Feature Store | Storage and API calls for features used in models | N/A | 10M write units, 10M read units, 25 GB free/month; beyond that: $0.005 per 1,000 units | Use only necessary features to control costs. |

| JumpStart Models | Pre-built Machine Learning models and example notebooks | N/A | Charged per instance used during execution | Can save development time but still incur instance costs. |

SageMaker Savings Plans

While SageMaker On-Demand pricing offers flexibility, Savings Plans let you reduce costs if you have predictable workloads. With a Savings Plan, you commit to a certain level of usage (measured in $/hour) for one or three years. In return, you can save up to 64% compared to On-Demand rates.

Key features of SageMaker Savings Plans:

- Applies to multiple workloads: Savings Plans cover SageMaker Studio notebooks, training, real-time and batch inference, Data Wrangler, processing jobs, and more.

- Flexible instance usage: You can switch between instance types, sizes, and regions without losing your discounted rate. For example, a commitment made for a CPU-based ml.c5.xlarge training instance can apply to an ml.Inf1 inference instance in another region.

- Payment options:

- All upfront: Pay the full commitment upfront for the maximum discount.

- Partial upfront: Pay 50% upfront and the rest monthly.

- No upfront: Pay monthly without any upfront cost, while still receiving Savings Plan discounts.

Savings Plans are best suited for teams with steady, predictable SageMaker workloads. For experimental projects or intermittent workloads, On-Demand pricing may remain more cost-efficient.

AWS SageMaker Free Tier

SageMaker AI is free to try — here’s what you get as free usage.

| Amazon SageMaker AI capability | Free Tier usage per month for the first 2 months |

| Studio notebooks, and notebook instances | 250 hours of ml.t3.medium instance on Studio notebooks OR 250 hours of ml.t2 medium instance or ml.t3.medium instance on notebook instances |

| RStudio on SageMaker | 250 hours of ml.t3.medium instance on RSession app AND free ml.t3.medium instance for RStudioServerPro app |

| Data Wrangler | 25 hours of ml.m5.4xlarge instance |

| Feature Store | 10 million write units, 10 million read units, 25 GB storage (standard online store) |

| Training | 50 hours of m4.xlarge or m5.xlarge instances |

| Amazon SageMaker with TensorBoard | 300 hours of ml.r5.large instance |

| Real-Time Inference | 125 hours of m4.xlarge or m5.xlarge instances |

| Serverless Inference | 150,000 seconds of on-demand inference duration |

| Canvas | 160 hours/month for session time |

| HyperPod | 50 hours of m5.xlarge instance |

| |

Tips to Reduce Your Amazon SageMaker Expenses

Let’s talk about some practical ways to achieve AWS SageMaker cost optimization.

1. Choose the Right Instance Types and Rightsize Based on Actual Workload Metrics

Picking the wrong instance type is one of the fastest ways to overspend in SageMaker, so you should treat instance selection as a

measured decision — not a default. Here’s the

practical, workload-specific process you can use:

- Start on the smallest reasonable class For notebooks and training experiments, begin with ml.t3.medium, ml.m5.large, or ml.c5.large instead of jumping straight to GPU or large CPU instances.

- Use SageMaker Debugger/Profiler to collect real utilization metrics Turn on monitoring to see:

- CPU/GPU utilization

- Disk I/O

- Memory consumption

- Step-by-step training bottlenecks

This will tell you exactly whether you need more compute, more memory, or a GPU at all. - Scale intentionally, not reactively If CPU is pegged at 90%+ → move to compute-optimized (c5, c6i). If memory is the bottleneck → move to memory-optimized (r5, r6i). If GPU is under 30% utilization → downgrade or switch back to CPU.

- Benchmark training duration vs. cost A larger instance isn’t always cheaper. Example: A job on ml.m5.xlarge that runs 3 hours costs more than the same job on ml.c5.2xlarge that finishes in 1 hour. Use small sample training runs to compare actual time × hourly rate.

- Pick GPU families strategically

- Use g4dn or g5 for most DL tasks — cheaper and often faster than older p2/p3.

- Use Inferentia (inf1/inf2) for deployment if model is compatible — massive cost savings for inference.

- Only use p3/p4 if you know you require extreme training performance.

- Re-evaluate instance choice regularly Model sizes, batch sizes, and code optimizations change. Re-check utilization every few iterations to avoid stale, oversized instance selections.

2. Use the Most Cost-Efficient Inference Option for Each Traffic Pattern

Inference is often the biggest long-term SageMaker expense, so choosing the right deployment mode can dramatically reduce costs. Start by understanding how your application actually receives traffic—whether it’s steady, bursty, long-running, or entirely offline.

- Real-Time Endpoints are the most expensive option because instances run 24/7. Use them only when you need strict low-latency responses and have consistent traffic that justifies always-on infrastructure costs.

- Serverless Inference is far more economical for unpredictable or spiky workloads. You pay only for compute during invocations, making it ideal for ML-driven features that sit idle for long stretches.

- Asynchronous Inference works best for large payloads or long-running requests, where keeping a dedicated endpoint online doesn’t make sense.

- Batch Transform is the lowest-cost choice for scheduled or offline prediction jobs, since compute spins up only for the duration of the batch.

Once deployed, use invocation counts, latency metrics, and idle time to confirm whether a mode is actually appropriate. If an endpoint spends more time idle than serving traffic, or if latency headroom is consistently high, it’s usually a sign you can switch to a cheaper inference option without sacrificing performance.

3. Leverage Multi-Model Endpoints (MME) to Consolidate Low-Traffic Models

If you manage many small or infrequently used models, running a separate endpoint for each one is one of the fastest ways to overspend in SageMaker. Multi-Model Endpoints let you host dozens—or even hundreds—of models behind a single endpoint, loading each model from S3 only when it’s needed. This dramatically reduces the number of instances you pay for and increases utilization of the ones you do keep. MMEs are especially effective when each model sees low or uneven traffic, such as per-tenant models, personalized recommendation models, or experiment variants. Instead of dedicating a full-time instance to each, you can consolidate them and allow SageMaker to dynamically load and cache models in memory. Frequently used models stay warm, while rarely used ones don’t consume resources until invoked. To get the most benefit, group models with similar size and latency requirements, monitor model load times to fine-tune caching behavior, and periodically review which models actually require dedicated endpoints. Many teams see 50%+ savings simply by moving long-tail models to MME. You can check out the

AWS Multi-Model Endpoints documentation for more info.

4. Automate Shutdown and Lifecycle Management to Eliminate Idle Compute

Idle SageMaker resources are one of the most common—and most avoidable—sources of ML waste. Notebooks, Studio kernels, training containers, and even full inference endpoints can continue running long after someone has stopped using them. Automating shutdown and lifecycle policies ensures you never pay for compute you’re not actively using. A practical way to do this is by using

SageMaker Lifecycle Configurations to automatically stop notebooks after a defined period of inactivity. For example, you can run a simple script during startup that installs an idle-monitoring daemon and shuts the instance down after 30–60 minutes of no kernel activity. This alone prevents the classic “left open over the weekend” scenario. For real-time inference endpoints, integrate

Application Auto Scaling and scale-to-zero strategies where possible. Even if the endpoint can’t fully scale to zero, you can configure scaling policies that reduce instance count during off-hours or non-peak windows. Teams running daytime-only workloads often cut endpoint costs in half through simple schedule-based scaling. Processing and training should also be time-bound. Use

Amazon EventBridge rules or

SageMaker Pipelines to orchestrate start/stop behavior so that compute exists only during actual execution time. You can also set maximum runtime limits on training jobs to avoid runaway processes that bill for hours longer than intended. Finally, enforce lifecycle policies consistently across environments—dev, staging, and production. Most waste happens in non-prod environments, where experimentation is fast and oversight is low.

5. Optimize and Tier Your Storage to Avoid Silent S3 and EFS Costs

SageMaker depends heavily on S3 and EFS, and these costs accumulate quietly as datasets, checkpoints, and Studio workspace files pile up. Set S3 lifecycle rules to automatically move older datasets and training artifacts into cheaper tiers like Infrequent Access or Glacier. Regularly clean up unused model checkpoints or duplicate datasets, and avoid storing large files in Studio’s EFS environment—which is far more expensive than S3. If you’re using Feature Store, keep as much data as possible in the offline store and prune unused feature groups. Small adjustments here prevent long-term storage bloat and keep recurring costs predictable.

6. Monitor Usage Continuously and Set Proactive Cost Alerts

To keep costs predictable, teams need real visibility into how models, users, and workloads consume resources. Start by using AWS Cost Explorer, CloudWatch metrics, and SageMaker usage dashboards to track endpoint utilization, training duration, idle time, and invocation patterns. From there, set guardrails that surface unexpected behavior early. This is where nOps adds a lot of practical value. nOps provides real-time

anomaly detection that immediately flags unusual SageMaker spend (e.g., a training job running 4× longer than normal). You can also use nOps

Budgets and

Forecasting to predict upcoming SageMaker costs based on historical patterns, catch budget drift before it becomes a problem, and get alerted when you’re likely to exceed expected spend. And because SageMaker costs can be hard to attribute, nOps Unit

Cost Allocation shows which teams, models, or environments are actually driving cost changes—helping you pinpoint the true source of waste. Combined, these tools create a feedback loop: you see cost changes as they happen, you understand

why they’re happening, and you can take action before the bill arrives.

7. Use Savings Plans Strategically for Predictable ML Workloads

If parts of your SageMaker usage run consistently—like production inference endpoints, scheduled training pipelines, or recurring data processing jobs—Savings Plans can lock in significantly lower hourly rates. The key is to apply them only where workload patterns are stable enough to justify a long-term commitment. Review your past 30–90 days of usage to identify steady baseline consumption, and commit only to that amount, not your peaks. This ensures you maximize discounts without overcommitting.

Reduce your AWS Machine Learning Costs with nOps

Running AI workloads on AWS can get expensive fast — especially with fast-growing Bedrock usage, untagged ML resources, or sudden spikes in token consumption. nOps gives you full visibility into your AWS, AI/ML, Kubernetes & SaaS spend all in one pane of glass. For Bedrock specifically, nOps provides model-level cost analysis, token-based usage insights, and AI Model Provider Recommendations — showing where switching to Nova, Llama, OpenAI OSS, or DeepSeek could cut costs while maintaining quality and performance. Across all your AWS services (including SageMaker), nOps helps you:

- Track and forecast ML-related cloud spend

- Detect sudden spikes or anomalies in usage

- Improve cost allocation with tagging and virtual tags

- Identify which teams, environments, or workloads drive cost changes

If you’re scaling AI on AWS, nOps gives you the visibility and actionable insights you need to run efficiently and confidently. nOps manages $2 billion in AWS spend and was recently ranked #1 in G2’s Cloud Cost Management category. Try it out for free with your AWS account by booking a demo today!

Frequently Asked Questions

Let’s dive into some key FAQ for optimizing SageMaker costs while maximizing SageMaker capabilities.

Is Amazon SageMaker expensive?

SageMaker can be expensive if you use large GPU instances, keep endpoints running 24/7, or forget to shut down notebooks. However, with the right instance sizing, inference mode selection, lifecycle automation, and Savings Plans, many teams significantly reduce costs. Pricing depends entirely on workload patterns and resource discipline.

How much does SageMaker cost per hour?

Hourly cost varies widely depending on the instance type and workload. CPU instances like ml.m5.large run around $0.115/hour, while GPU instances such as ml.p3.2xlarge can exceed $3.80/hour. Training, inference, notebooks, and Studio apps all bill separately based on their underlying compute.

How do SageMaker training jobs and inference endpoints get billed?

Jobs are billed per instance-hour from job start until completion, including GPU acceleration. Real-time endpoints bill continuously as long as the endpoint is running, even when idle. Batch Transform and serverless or async inference are billed only for compute consumed during actual processing.

Does SageMaker charge when idle?

Yes. Notebook instances, Studio apps, and real-time inference endpoints continue billing as long as the underlying instance is running. Serverless Inference and Batch Transform avoid idle charges because they bill only during compute execution, not while waiting for requests.

Is SageMaker cheaper than EC2 for machine learning?

It depends on your workflow. EC2 can be cheaper for teams managing everything manually, but SageMaker reduces overhead with managed training, deployment, pipelines, experiment tracking, and autoscaling. The savings often come from operational efficiency rather than raw compute pricing alone.

Does SageMaker charge separately for storage?

Yes. Datasets, model artifacts, and logs stored in Amazon S3 incur S3 storage costs. Studio uses EFS, which is more expensive than S3. Feature Store online storage and high-volume reads/writes also add cost. SageMaker itself doesn’t bill for storage, but the underlying AWS services do.

Can I use Spot Instances with SageMaker to save money?

Yes. SageMaker Managed Spot Training can reduce training costs by 70–90% for workloads that can tolerate interruptions. Amazon automatically provisions Spot capacity and checkpoints progress. It’s ideal for large jobs, hyperparameter tuning, and experimentation where occasional restarts are acceptable.

Are Savings Plans worth it for SageMaker?

Savings Plans deliver meaningful discounts for teams with steady, predictable SageMaker workloads—especially production inference or recurring training pipelines. They’re most effective when you commit only to your baseline usage. Overcommitting reduces flexibility, but right-sizing a commitment can cut costs up to 64%.

Does SageMaker use EC2?

Yes. All SageMaker compute— training, inference endpoints, notebooks, Studio apps—runs on EC2 instances under the hood. You don’t manage the EC2 directly, but you’re billed for the underlying instance-hours. This is why instance choice, uptime, and GPU usage have such a large impact on SageMaker pricing.

What is the difference between SageMaker and Bedrock?

SageMaker is a full machine-learning platform for building, training, and deploying custom models, billed primarily for compute and storage. Bedrock is a managed generative AI service where you pay per token to use foundation models like Claude, Nova, and Llama. SageMaker is ML infrastructure; Bedrock is model access.

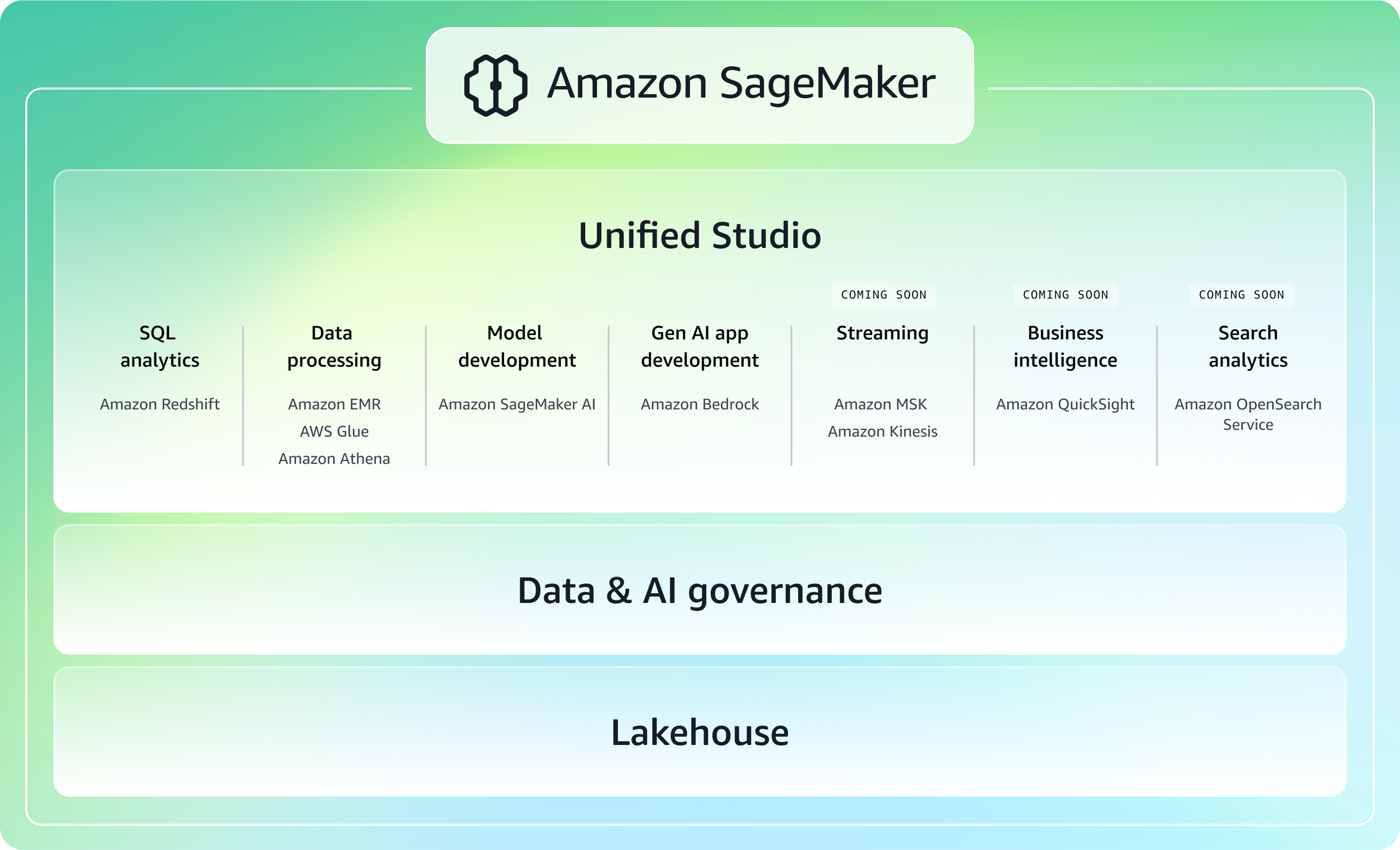

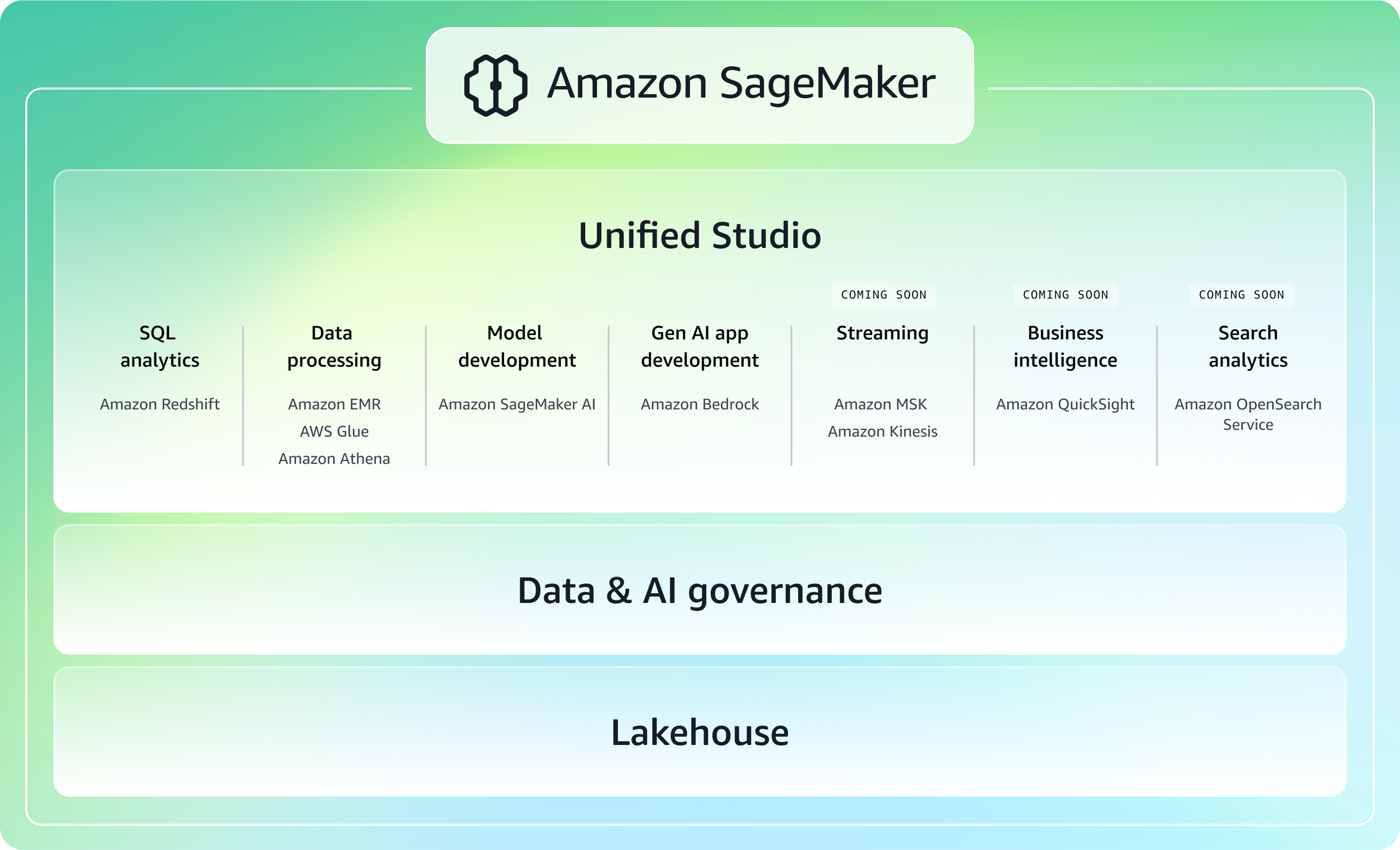

Is SageMaker Unified Studio free?

SageMaker Unified Studio doesn’t have a standalone price, but it’s not “free.” You are billed for the underlying resources it runs—such as notebooks, training instances, inference endpoints, EFS storage, and Data Wrangler sessions.

SageMaker billing is based on a pay-as-you-go model. You pay only for the resources you use—there are no upfront fees or long-term commitments. This on-demand model allows teams to scale their machine learning workflows dynamically, matching costs to actual usage. If you’re unsure whether SageMaker meets your needs, you can start with the Amazon SageMaker Free Tier, which provides a limited amount of compute and storage for testing. This lets teams experiment with features and workflows before committing to larger workloads. From a total cost of ownership (TCO) perspective, SageMaker can help organizations reduce the need for extensive data science infrastructure and specialized personnel. By automating many aspects of the ML lifecycle—such as model training, tuning, and deployment—SageMaker can accelerate development while lowering operational overhead. This makes it a compelling choice for teams looking to scale machine learning efficiently.

SageMaker billing is based on a pay-as-you-go model. You pay only for the resources you use—there are no upfront fees or long-term commitments. This on-demand model allows teams to scale their machine learning workflows dynamically, matching costs to actual usage. If you’re unsure whether SageMaker meets your needs, you can start with the Amazon SageMaker Free Tier, which provides a limited amount of compute and storage for testing. This lets teams experiment with features and workflows before committing to larger workloads. From a total cost of ownership (TCO) perspective, SageMaker can help organizations reduce the need for extensive data science infrastructure and specialized personnel. By automating many aspects of the ML lifecycle—such as model training, tuning, and deployment—SageMaker can accelerate development while lowering operational overhead. This makes it a compelling choice for teams looking to scale machine learning efficiently.